How Do Convolutional Layers Work in Deep Learning Neural Networks?

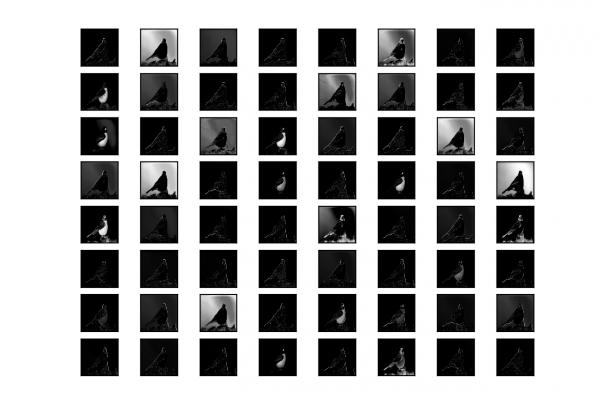

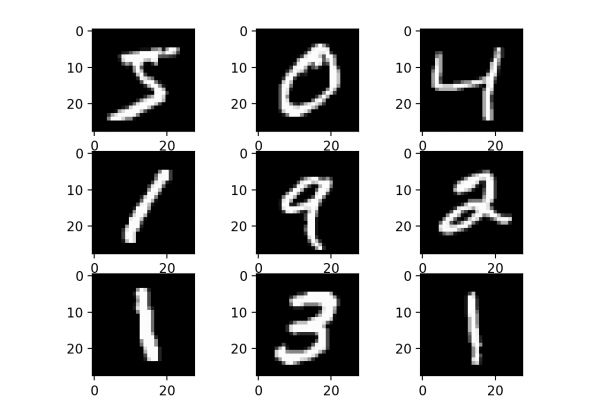

Last Updated on April 17, 2020

Convolutional layers are the major building blocks used in convolutional neural networks. A convolution is the simple application of a filter to an input that results in an activation. Repeated application of the same filter to an input results in a map of activations called a feature map, indicating the locations and strength of a detected feature in an input, such as an image. The innovation of convolutional neural networks is the ability to […]
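To make "repeated application of the same filter" concrete, here is a minimal sketch in plain Python. It assumes a single-channel input, a single 3x3 filter, stride 1 and no padding; the names `conv2d`, `image` and `vertical_line` are illustrative only. Each output value is one activation (the filter multiplied element-wise with the patch beneath it, then summed), and sliding the filter across every position yields the feature map.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    Like most deep learning libraries, this computes cross-correlation:
    the kernel is applied as-is, without flipping.
    """
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = ih - kh + 1, iw - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # One activation: multiply the filter with the patch under it and sum.
            acc = 0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        feature_map.append(row)
    return feature_map


# A 6x6 input containing a vertical line in column 2.
image = [[1 if c == 2 else 0 for c in range(6)] for _ in range(6)]

# A 3x3 filter that responds to vertical lines.
vertical_line = [[0, 1, 0],
                 [0, 1, 0],
                 [0, 1, 0]]

feature_map = conv2d(image, vertical_line)
for row in feature_map:
    print(row)  # each of the 4 rows prints [0, 3, 0, 0]
```

The strong activation (3) appears only where the filter sits centred on the line, which is the "location and strength" a feature map records. Layers such as Keras `Conv2D` or PyTorch `torch.nn.Conv2d` perform the same sliding operation, but with many learned filters, multiple input channels, and configurable stride and padding.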
