A Gentle Introduction to the Challenge of Training Deep Learning Neural Network Models

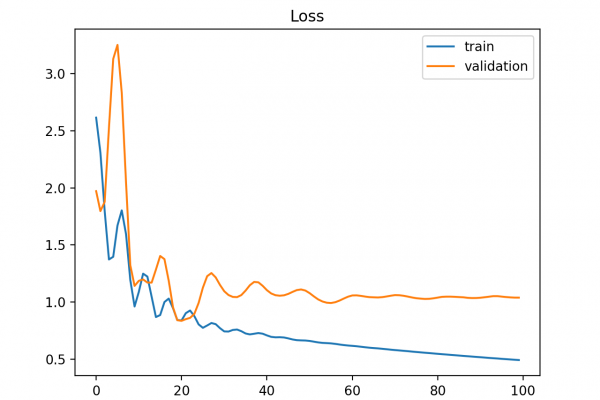

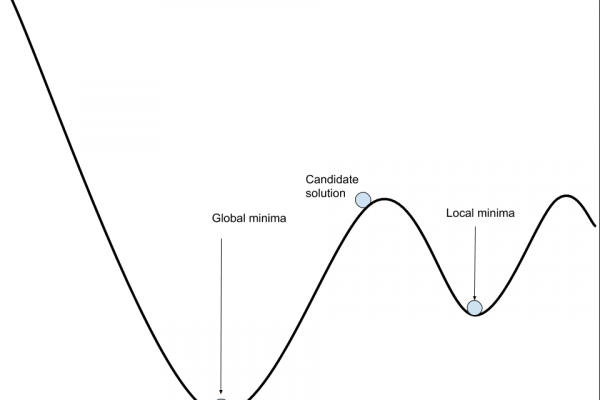

Last Updated on August 6, 2019

Deep learning neural networks learn a mapping function from inputs to outputs. This is achieved by updating the weights of the network in response to the errors the model makes on the training dataset. Updates are made to continually reduce this error until either a good enough model is found or the learning process gets stuck and stops. The process of training neural networks is the most challenging part of using the technique in […]
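The update-until-good-enough-or-stuck loop described above can be illustrated with a very small sketch. It assumes a single linear unit (`y ≈ w*x + b`) trained by plain gradient descent on mean squared error, rather than a deep network trained with backpropagation. The function name `train` and the thresholds `good_enough`, `min_improvement` and `patience` are hypothetical choices made for this illustration. A loss plateau stands in for "getting stuck".

```python
def train(xs, ys, lr=0.05, max_epochs=10_000, good_enough=1e-6,
          min_improvement=1e-12, patience=50):
    """Fit y ~ w*x + b by gradient descent on mean squared error.

    Hypothetical stopping rules: stop when the loss is "good enough",
    or when it has failed to improve for `patience` consecutive epochs
    (a simple stand-in for the learning process getting stuck).
    """
    w, b = 0.0, 0.0
    n = len(xs)
    best = float("inf")
    stalled = 0
    loss = float("inf")
    for _ in range(max_epochs):
        # Errors the model makes on the training data.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        loss = sum(e * e for e in errors) / n
        if loss < good_enough:
            return w, b, loss, "good enough"
        # Count consecutive epochs with negligible improvement.
        if best - loss < min_improvement:
            stalled += 1
            if stalled >= patience:
                return w, b, loss, "stuck"
        else:
            stalled = 0
        best = min(best, loss)
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Move the weights in the direction that reduces the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, loss, "max epochs"


xs = [i / 10 for i in range(-10, 11)]
w, b, loss, reason = train(xs, [2 * x + 1 for x in xs])
print(w, b, loss, reason)
```

On data generated from `2x + 1`, the loop should approach `w = 2`, `b = 1` and stop as "good enough". On data a straight line cannot fit, such as `x ** 2`, the loss levels off above zero and the loop stops as "stuck".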
