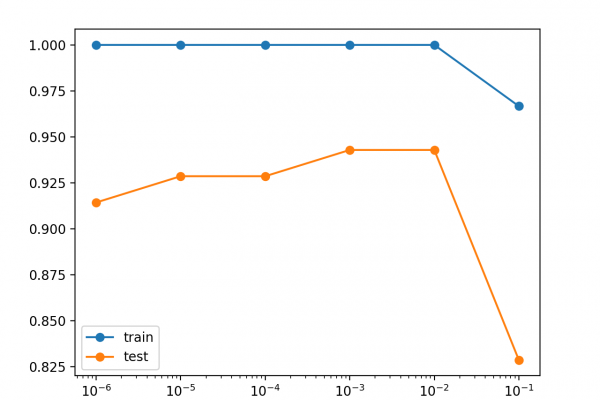

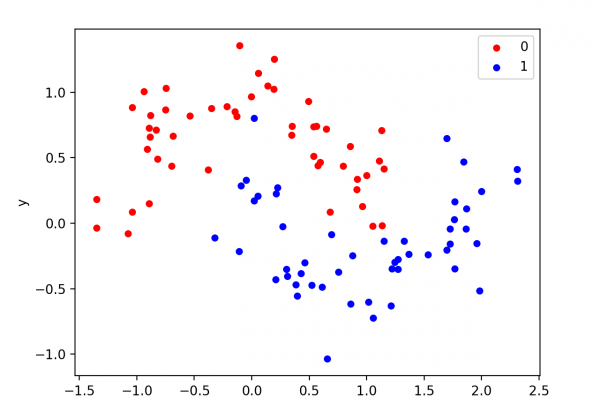

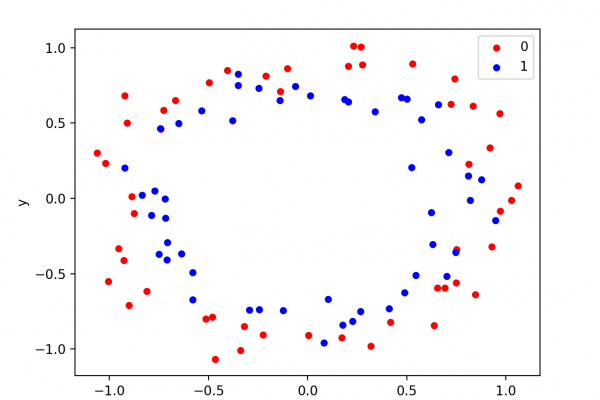

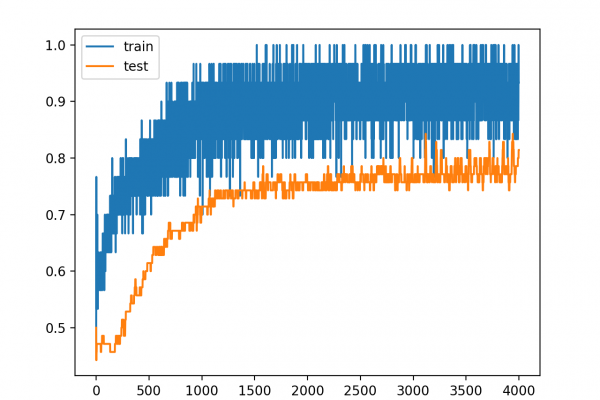

Use Weight Regularization to Reduce Overfitting of Deep Learning Models

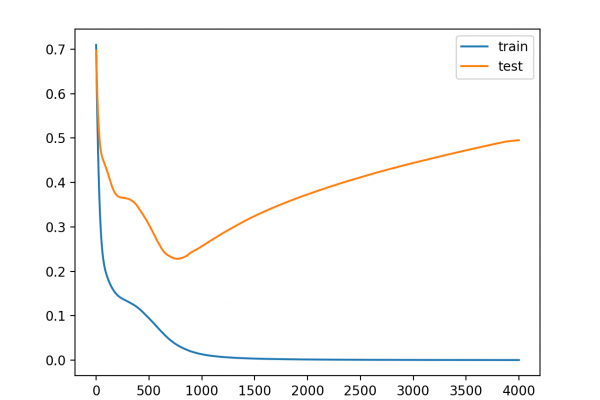

Last Updated on August 6, 2019

Neural networks learn a set of weights that best map inputs to outputs. A network with large weights can be a sign of an unstable network, where small changes in the input can lead to large changes in the output. This can be a sign that the network has overfit the training dataset and will likely perform poorly when making predictions on new data. A solution to this problem is to update the […]
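The excerpt stops before describing the fix, but the title names it: weight regularization. Below is a minimal sketch of one common form, an L2 penalty (the sum of squared weights, scaled by a strength `l2`) added to the training loss. To keep it dependency-free it assumes a plain linear model trained by gradient descent rather than a deep network. The function `train_linear`, the helper `norm`, the synthetic data and the penalty strength of 0.5 are all illustrative assumptions, not taken from the article.

```python
import math
import random

def train_linear(xs, ys, l2=0.0, lr=0.05, epochs=2000):
    """Fit y ~ w.x by full-batch gradient descent on mean squared error.
    Illustrative sketch: the optional L2 penalty adds l2 * sum(w_j^2) to the loss."""
    n_features = len(xs[0])
    n = len(xs)
    w = [0.0] * n_features
    for _ in range(epochs):
        grad = [0.0] * n_features
        for x, y in zip(xs, ys):
            err = sum(wj * xj for wj, xj in zip(w, x)) - y
            for j in range(n_features):
                grad[j] += 2.0 * err * x[j] / n  # gradient of the MSE term
        for j in range(n_features):
            # Gradient of l2 * w_j^2 is 2 * l2 * w_j: it pulls each weight toward zero.
            grad[j] += 2.0 * l2 * w[j]
            w[j] -= lr * grad[j]
    return w

def norm(w):
    """Euclidean length of the weight vector."""
    return math.sqrt(sum(wj * wj for wj in w))

# Hypothetical synthetic data: y = 3*x0 - 2*x1 plus a little Gaussian noise.
random.seed(0)
true_w = [3.0, -2.0]
xs = [[random.uniform(-1, 1) for _ in range(2)] for _ in range(50)]
ys = [sum(t * xj for t, xj in zip(true_w, x)) + random.gauss(0, 0.1) for x in xs]

w_plain = train_linear(xs, ys, l2=0.0)  # no regularization
w_l2 = train_linear(xs, ys, l2=0.5)     # with an L2 weight penalty

print("unregularized:", w_plain, "norm", norm(w_plain))
print("L2-regularized:", w_l2, "norm", norm(w_l2))
```

For a linear model, perturbing the input by a small vector `d` changes the output by exactly `w.d`, so a smaller weight vector limits how far the output can swing for a given input change. That is the instability the paragraph describes. The penalty trades a little training-set fit for that smaller norm, and `l2` controls how strongly it does so.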
