How to Generate Random Numbers in Python

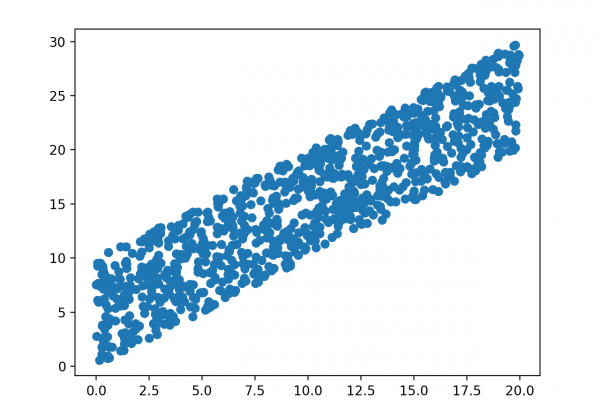

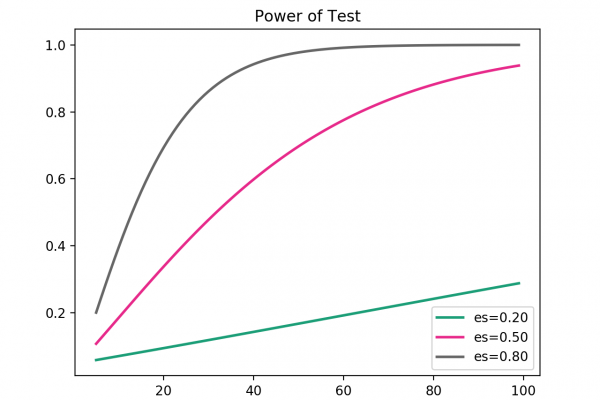

Last Updated on September 4, 2020

The use of randomness is an important part of the configuration and evaluation of machine learning algorithms. From the random initialization of weights in an artificial neural network, to the splitting of data into random train and test sets, to the random shuffling of a training dataset in stochastic gradient descent, generating random numbers and harnessing randomness is a required skill. In this tutorial, you will discover how to generate and work with random […]
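As a rough illustration of the uses mentioned above (random weight initialization, shuffling, and a random train/test split), here is a minimal sketch using only Python's standard `random` module. The helper `split_train_test`, its `test_fraction` and `seed` parameters, and the weight range of -0.5 to 0.5 are hypothetical choices made for this example, not something taken from the tutorial itself.

```python
import random


def split_train_test(items, test_fraction=0.25, seed=1):
    """Shuffle a copy of items and split it into (train, test) lists.

    Hypothetical helper for illustration; seeding makes the split repeatable.
    """
    rng = random.Random(seed)      # private generator, leaves global state alone
    shuffled = list(items)         # copy so the caller's data is not modified
    rng.shuffle(shuffled)          # in-place random shuffle of the copy
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


# Small random "weights", drawn uniformly from an assumed range of [-0.5, 0.5].
weight_rng = random.Random(1)
weights = [weight_rng.uniform(-0.5, 0.5) for _ in range(4)]

# Random train/test split of a toy dataset of 20 integers.
train, test = split_train_test(range(20))

print(weights)
print(train)
print(test)
```

Because each generator is seeded, running the script again should produce the same weights and the same split, which is usually what you want when comparing experiments.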
