Encoder-Decoder Long Short-Term Memory Networks

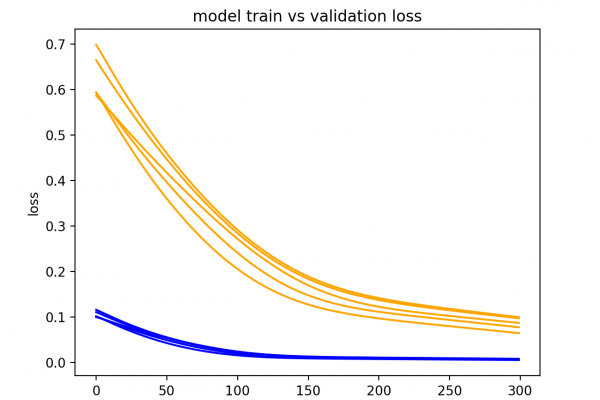

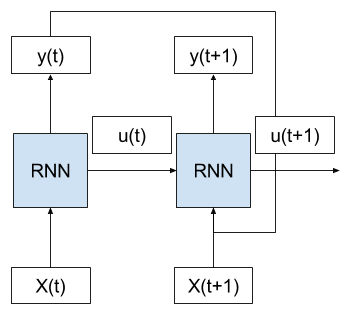

Last Updated on August 14, 2019

A gentle introduction to Encoder-Decoder LSTMs for sequence-to-sequence prediction, with example Python code. The Encoder-Decoder LSTM is a recurrent neural network designed to address sequence-to-sequence problems, sometimes called seq2seq. Sequence-to-sequence prediction problems are challenging because the number of items in the input and output sequences can vary. Text translation and learning to execute programs are examples of seq2seq problems. In this post, you will discover the Encoder-Decoder LSTM architecture for sequence-to-sequence prediction. After […]
