How to Develop Your First XGBoost Model in Python with scikit-learn

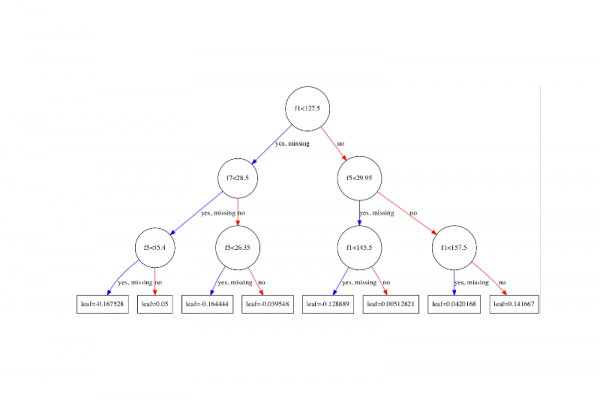

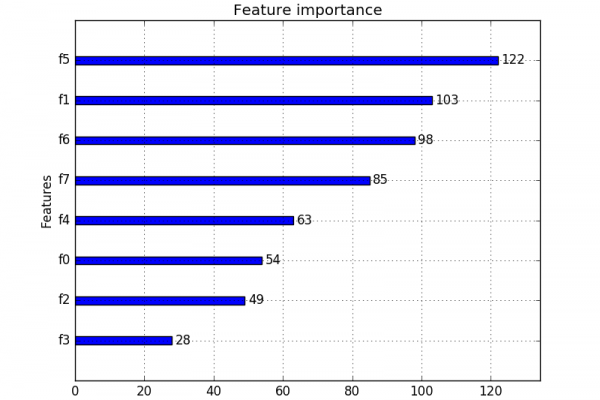

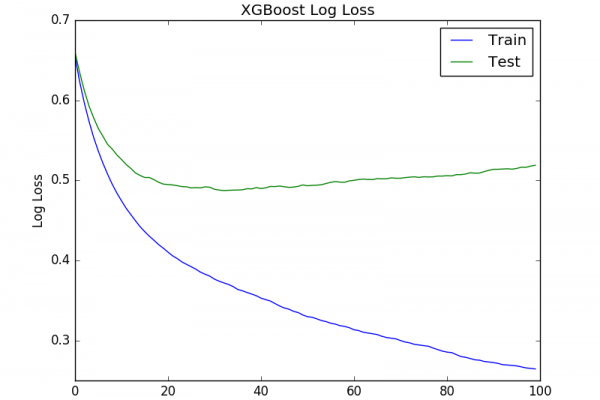

Last Updated on August 27, 2020

XGBoost is an implementation of gradient boosted decision trees designed for speed and performance, and it has come to dominate competitive machine learning.

In this post you will discover how to install XGBoost and create your first XGBoost model in Python.

After reading this post you will know:

- How to install XGBoost on your system for use in Python.
- How to prepare data and train your first XGBoost model.
- How to make predictions using your XGBoost model.

Kick-start […]