Basin Hopping Optimization in Python

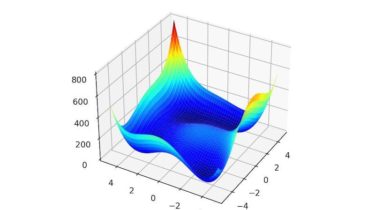

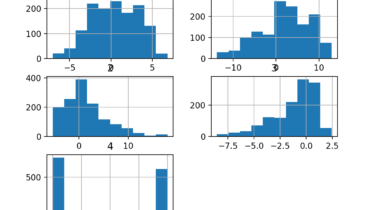

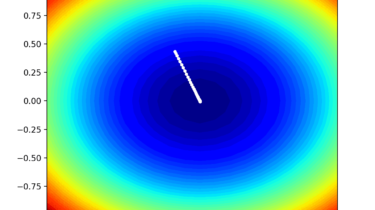

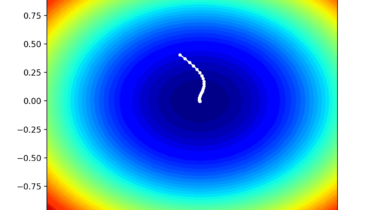

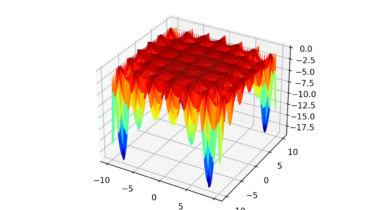

Basin hopping is a global optimization algorithm. It was developed to solve problems in chemical physics, but it is effective more generally on nonlinear objective functions with multiple optima. In this tutorial, you will discover the basin hopping global optimization algorithm. After completing this tutorial, you will know:

- Basin hopping is a global optimization algorithm that uses random perturbations to jump between basins, and a local search algorithm to optimize each basin.
- How to use the basin hopping optimization algorithm […]
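As a rough illustration of the idea described above, the sketch below runs basin hopping through SciPy's `scipy.optimize.basinhopping` function. The one-dimensional objective, the starting point, and the settings (`niter=200`, `stepsize=0.5`, L-BFGS-B as the local search, `seed=1`) are assumptions chosen for this example and are not taken from the tutorial.

```python
import numpy as np
from scipy.optimize import basinhopping


def objective(x):
    # Illustrative 1-D function with many local minima (an assumption for
    # this sketch): a cosine ripple on top of a shallow parabola.
    return np.cos(14.5 * x[0] - 0.3) + (x[0] + 0.2) * x[0]


# Starting point, deliberately placed away from the best basin.
x0 = np.array([1.0])

# Each iteration randomly perturbs the current point (controlled by stepsize),
# then runs a local search (here L-BFGS-B) to reach the bottom of the basin
# the perturbed point landed in. The new minimum is accepted or rejected
# based on its objective value.
result = basinhopping(
    objective,
    x0,
    niter=200,
    stepsize=0.5,
    minimizer_kwargs={"method": "L-BFGS-B"},
    seed=1,  # fixed seed so repeated runs take the same random steps
)

print("Best x found:", result.x)
print("Objective at best x:", result.fun)
```

The returned object holds the best local minimum found across all hops in `result.x` and `result.fun`. A larger `stepsize` makes it easier to jump between distant basins, while a smaller one keeps the search close to the current basin, so this value usually needs tuning for each problem.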
