LinkBERT: A Knowledgeable Language Model Pretrained with Document Links

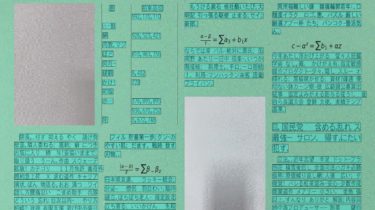

This repo provides the model, code & data of our paper: LinkBERT: Pretraining Language Models with Document Links (ACL 2022).

    @InProceedings{yasunaga2022linkbert,
      author =  {Michihiro Yasunaga and Jure Leskovec and Percy Liang},
      title =   {LinkBERT: Pretraining Language Models with Document Links},
      year =    {2022},
      booktitle = {Association for Computational Linguistics (ACL)},
    }

Overview

LinkBERT is a new pretrained language model (an improvement on BERT) that captures document links, such as hyperlinks and citation links.
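The overview does not spell out how links enter pretraining. As a rough illustration only, the sketch below assumes the setup described in the LinkBERT paper. An anchor segment is paired with one of three second segments: the next segment of the same document, a segment from a random document, or a segment from a document it links to. The model is then trained to predict which relation holds, alongside masked language modeling. Everything here is hypothetical and is not the repository's code: the toy corpus, the link map, the names `Corpus`, `make_pair`, and `to_bert_input`, and the uniform choice among the three relations.

```python
import random
from dataclasses import dataclass, field

CONTIGUOUS, RANDOM, LINKED = "contiguous", "random", "linked"


@dataclass
class Corpus:
    # Hypothetical toy corpus: document id -> ordered list of text segments.
    docs: dict[str, list[str]]
    # Hypothetical link graph: document id -> ids of documents it links to.
    links: dict[str, list[str]] = field(default_factory=dict)


def make_pair(corpus, doc_id, idx, rng):
    """Pair the anchor segment docs[doc_id][idx] with a second segment.

    Returns (segment_a, segment_b, relation_label).
    """
    segments = corpus.docs[doc_id]
    seg_a = segments[idx]
    # Sketch assumption: each relation is tried with equal probability.
    choice = rng.choice([CONTIGUOUS, RANDOM, LINKED])
    if choice == CONTIGUOUS and idx + 1 < len(segments):
        # The next segment from the same document.
        return seg_a, segments[idx + 1], CONTIGUOUS
    if choice == LINKED and corpus.links.get(doc_id):
        # A segment from a document that this document links to.
        target = rng.choice(corpus.links[doc_id])
        return seg_a, rng.choice(corpus.docs[target]), LINKED
    # Random relation, or fallback when the chosen relation is unavailable:
    # take a segment from some other document and label the pair "random".
    other = rng.choice([d for d in corpus.docs if d != doc_id])
    return seg_a, rng.choice(corpus.docs[other]), RANDOM


def to_bert_input(seg_a, seg_b):
    # BERT-style two-segment input as a string; a real pipeline would tokenize.
    return f"[CLS] {seg_a} [SEP] {seg_b} [SEP]"


# Hypothetical toy data: Tidal_Basin links to Cherry_blossom.
corpus = Corpus(
    docs={
        "Tidal_Basin": ["The Tidal Basin is a reservoir in Washington, D.C.",
                        "It is lined with Japanese cherry trees."],
        "Cherry_blossom": ["Cherry blossoms are the flowers of Prunus trees.",
                           "Hanami is the custom of viewing them."],
        "Photosynthesis": ["Plants convert light into chemical energy."],
    },
    links={"Tidal_Basin": ["Cherry_blossom"]},
)

rng = random.Random(0)
for _ in range(3):
    a, b, label = make_pair(corpus, "Tidal_Basin", 0, rng)
    print(f"{label:>10}: {to_bert_input(a, b)}")
```

The "linked" option places text from a different document in the same input. This is the intuition behind learning from document links: the model can attend across both documents. The label always records where segment B actually came from, including when the fallback applies, so the relation-prediction target stays correct.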
