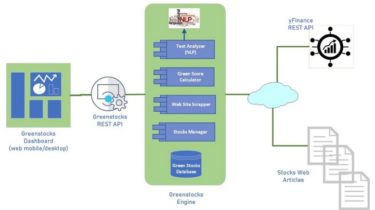

An online application powered by machine learning algorithms

About GIA

Greenstocks Investment Advisor (GIA) is an online application powered by machine learning algorithms that facilitates reliable stock market investments in companies that implement strong Environmental, Social and Governance (ESG) policies.

What is ESG Investing

Investors interested in ESG are increasingly applying these non-financial factors as part of their analysis process to identify material risks and growth opportunities. ESG metrics are not commonly part of mandatory financial reporting, though companies are increasingly making disclosures in their annual report […]
