BERT Explained

Continuous innovation in how models understand sentences in context has significantly pushed the boundaries of NLP.

The Transformer architecture, introduced in the 2017 paper "Attention Is All You Need", is built around the idea of self-attention.

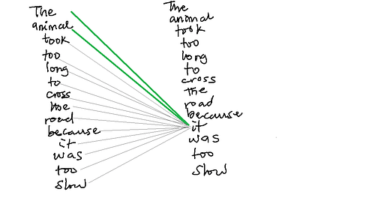
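To make self-attention more concrete, here is a minimal single-head sketch of scaled dot-product attention, softmax(QKᵀ / √d_k)·V, which is the formulation used in "Attention Is All You Need". It is written in pure Python for readability. The projection matrices `w_q`, `w_k`, `w_v` and the toy token vectors are hypothetical placeholders. A real Transformer learns these weights, runs many heads in parallel, and uses a tensor library for speed.

```python
import math

def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    # Plain matrix product of a (n x k) and b (k x m).
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(row) for row in zip(*m)]

def self_attention(x, w_q, w_k, w_v):
    # Project each token vector into query, key and value spaces.
    q = matmul(x, w_q)
    k = matmul(x, w_k)
    v = matmul(x, w_v)
    d_k = len(k[0])
    # Score every token against every other token, scaled by sqrt(d_k).
    scores = matmul(q, transpose(k))
    # Each row of weights says how much one token attends to each token.
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    # Each output vector is a weighted mix of all value vectors.
    return matmul(weights, v), weights

# Toy example: 3 hypothetical tokens with 2-dimensional embeddings,
# using identity projections purely for illustration.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1, so every output vector is a context-dependent blend of the whole sequence rather than a function of a single token in isolation.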

Self-attention learns to weigh the relationship between each item/word to