
Token Shift GPT

Implementation of Token Shift GPT – An autoregressive model that relies solely on shifting along the sequence dimension and feedforwards.
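As a rough illustration of what shifting along the sequence dimension can look like, the sketch below moves half of the feature channels one step into the past, so each position sees part of the previous token without any attention. The helper names `shift_one` and `token_shift` and the half/half split are assumptions made for this example, not necessarily what this repository does.

```python
import torch
import torch.nn.functional as F


def shift_one(x: torch.Tensor) -> torch.Tensor:
    """Shift a (batch, seq, dim) tensor one step along the sequence axis.

    Position t receives the features of position t - 1 and position 0
    receives zeros, so nothing leaks in from the future.
    """
    # F.pad reads pads from the last axis backwards:
    # (dim_left, dim_right, seq_left, seq_right). A negative pad crops.
    return F.pad(x, (0, 0, 1, -1), value=0.0)


def token_shift(x: torch.Tensor) -> torch.Tensor:
    """Keep the first half of the features, shift the second half by one token."""
    current, past = x.chunk(2, dim=-1)
    return torch.cat((current, shift_one(past)), dim=-1)


# Tiny example: batch of 1, sequence of 4 tokens, 2 features per token.
x = torch.arange(1, 9, dtype=torch.float32).reshape(1, 4, 2)
y = token_shift(x)
print(y[0])
```

In the printed result, the first column is unchanged while the second column holds the previous token's value, with a zero at the first position.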

Update: Inexplicably, it actually works quite well. The feedforward module follows the same design as gMLP, except that the feature dimension of the gate tensor is divided into log2(seq_len) chunks, and the mean pool of each past consecutive segment (lengths 1, 2, 4, 8, and so on into the past) is shifted into its corresponding chunk before a projection along the feature dimension.
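The segment-wise mean pooling described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the repository's code: the function name `segment_mean_shift` is hypothetical, chunk k is assumed to read the window of 2**k tokens covering positions t - 2 * 2**k + 1 through t - 2**k, windows are clipped at the start of the sequence, and empty windows yield zeros. It also assumes `seq_len` is a power of two (at least 2) and that the feature dimension divides evenly into log2(seq_len) chunks.

```python
import math

import torch


def segment_mean_shift(gate: torch.Tensor) -> torch.Tensor:
    """Replace feature chunk k with the mean of a past window of 2**k tokens.

    gate has shape (batch, seq_len, dim). The windows for k = 0, 1, 2, ...
    are consecutive: 1 token back, then the 2 before it, then the 4 before
    those, and so on. Only strictly earlier positions are read.
    """
    batch, seq_len, _ = gate.shape
    num_chunks = int(math.log2(seq_len))       # assumption: power-of-two length
    chunks = gate.chunk(num_chunks, dim=-1)    # assumption: dim % num_chunks == 0
    positions = torch.arange(seq_len, device=gate.device)

    outputs = []
    for k, chunk in enumerate(chunks):
        length = 2 ** k
        # Half-open window [lo, hi) over past positions, clipped at 0.
        hi = (positions - length + 1).clamp(min=0)
        lo = (positions - 2 * length + 1).clamp(min=0)
        # Prefix sums with a leading zero row: prefix[:, i] = sum of chunk[:, :i].
        zeros = chunk.new_zeros(batch, 1, chunk.shape[-1])
        prefix = torch.cat((zeros, chunk.cumsum(dim=1)), dim=1)
        window_sum = prefix[:, hi] - prefix[:, lo]
        # Avoid dividing by zero where the window is empty (sum is 0 there).
        count = (hi - lo).clamp(min=1).view(1, seq_len, 1)
        outputs.append(window_sum / count)
    return torch.cat(outputs, dim=-1)


# Tiny example: seq_len = 4 gives log2(4) = 2 chunks of one feature each.
x = torch.arange(1, 9, dtype=torch.float32).reshape(1, 4, 2)
out = segment_mean_shift(x)
print(out[0])
```

In this example the first feature column carries the previous token (window length 1) and the second carries the mean of the two tokens before that (window length 2). In a gMLP-style block, the result would then go through the projection along the feature dimension mentioned above.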

Install

$ pip install token-shift-gpt

Usage

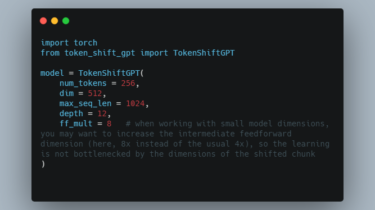

import torch
from token_shift_gpt import TokenShiftGPT

model = TokenShiftGPT(
    num_tokens = 256,
    dim = 512,
    max_seq_len = 1024,
    depth = 12,
    ff_mult = 8 # when working with small model dimensions, you may want to