A Python package to facilitate research on building and evaluating automated scoring models

Automated scoring of written and spoken test responses is a growing field in educational natural language processing. Automated scoring engines employ machine learning models to predict scores for such responses based on features extracted from the text/audio of these responses. Examples of automated scoring engines include Project Essay Grade for written responses and SpeechRater for spoken responses.

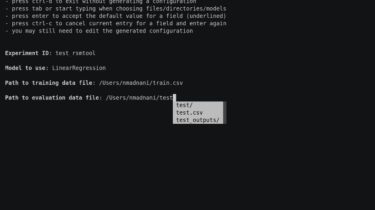

Rater Scoring Modeling Tool (RSMTool) is a python package which automates and combines in a single pipeline multiple analyses that are commonly conducted when building and evaluating such scoring models. The output of RSMTool is a comprehensive, customizable HTML statistical report that contains the output of these multiple analyses. While RSMTool does make it really simple to run a set of standard analyses using a single command, it is