A novel attention-based architecture for vision-and-language navigation

Episodic Transformers (E.T.)

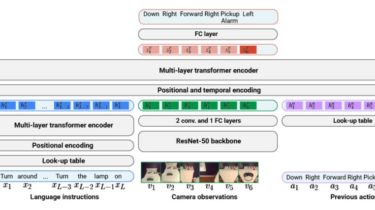

Episodic Transformer (E.T.) is a novel attention-based architecture for vision-and-language navigation. E.T. is based on a multimodal transformer that encodes language inputs and the full episode history of visual observations and actions. This code reproduces the results obtained with E.T. on the ALFRED benchmark. To learn more about the benchmark and the original code, please refer to the ALFRED repository.

Quickstart

Clone repo:

$ git clone https://github.com/alexpashevich/E.T..git ET
$ export ET_ROOT=$(pwd)/ET
$ export ET_LOGS=$ET_ROOT/logs
$ export ET_DATA=$ET_ROOT/data
$ export PYTHONPATH=$PYTHONPATH:$ET_ROOT
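The exports above only live in the current shell, so a later step run from a fresh terminal can silently see empty values. A minimal sanity check, written as plain POSIX sh, is sketched below. The function name `check_et_env` is hypothetical and not part of the E.T. repository. It only checks that the variables are set, that `ET_ROOT` is an existing directory, and that `ET_ROOT` appears as an entry of `PYTHONPATH`.

```shell
# Hypothetical helper (not part of the E.T. repository): checks that the
# variables exported above are set. Plain POSIX sh, so it can be sourced
# from bash, dash or zsh.
check_et_env() {
  rc=0
  # ET_ROOT must point at the cloned repository.
  if [ -z "${ET_ROOT:-}" ] || [ ! -d "$ET_ROOT" ]; then
    echo "ET_ROOT is unset or is not a directory" >&2
    rc=1
  fi
  # ET_LOGS and ET_DATA only need to be set; their contents are not checked.
  [ -n "${ET_LOGS:-}" ] || { echo "ET_LOGS is not set" >&2; rc=1; }
  [ -n "${ET_DATA:-}" ] || { echo "ET_DATA is not set" >&2; rc=1; }
  # PYTHONPATH is colon-separated; wrapping it in colons lets ET_ROOT match
  # as a whole entry anywhere in the list.
  case ":${PYTHONPATH:-}:" in
    *":${ET_ROOT:-}:"*) ;;
    *) echo "PYTHONPATH does not contain ET_ROOT" >&2; rc=1 ;;
  esac
  return "$rc"
}
```

Define it in the shell where the exports were run and call `check_et_env && echo "environment OK"`. On failure it reports each missing piece on stderr and returns a non-zero status.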

Install requirements:

$ virtualenv -p $(which python3.7) et_env
$ source et_env/bin/activate
$ cd $ET_ROOT
$ pip install --upgrade pip
$ pip install -r requirements.txt
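Two common ways for these steps to go wrong are a missing `python3.7` interpreter (then `$(which python3.7)` expands to nothing) and running `pip` outside the activated environment. The sketch below checks both before the `pip` commands. `check_et_install` is a hypothetical name, not part of the E.T. repository. It assumes only that the `activate` script exports `VIRTUAL_ENV`, which virtualenv does.

```shell
# Hypothetical pre-flight check (not part of the E.T. repository) for the
# install steps above; run it after `source et_env/bin/activate`.
check_et_install() {
  # The virtualenv command above resolves python3.7 via `which`,
  # so the interpreter must be on PATH.
  if ! command -v python3.7 >/dev/null 2>&1; then
    echo "python3.7 not found on PATH" >&2
    return 1
  fi
  # The activate script exports VIRTUAL_ENV; without it, pip would likely
  # install the requirements into a different Python environment.
  if [ -z "${VIRTUAL_ENV:-}" ]; then
    echo "no virtualenv is active; run: source et_env/bin/activate" >&2
    return 1
  fi
  echo "using virtualenv at $VIRTUAL_ENV"
}
```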

Downloading data and checkpoints

Download ALFRED dataset:

$ cd $ET_DATA
$ sh download_data.sh json_feat
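If `ET_DATA` is unset in the current shell, `cd $ET_DATA` silently changes to the home directory and the download script is not found there. The wrapper below is a minimal sketch of a safer variant. `download_alfred` is a hypothetical name, not part of the E.T. repository. Its argument is passed unchanged to `download_data.sh` and defaults to `json_feat`, the option used above. No other options are assumed.

```shell
# Hypothetical wrapper (not part of the E.T. repository) around the download
# step above. It fails early when ET_DATA or download_data.sh is missing.
download_alfred() {
  # The argument is passed through unchanged; the Quickstart uses json_feat.
  variant="${1:-json_feat}"
  if [ -z "${ET_DATA:-}" ] || [ ! -f "$ET_DATA/download_data.sh" ]; then
    echo "download_data.sh not found in ET_DATA='${ET_DATA:-}'" >&2
    return 1
  fi
  # Run in a subshell so the caller's working directory is left unchanged.
  ( cd "$ET_DATA" && sh download_data.sh "$variant" )
}
```

Unlike the two commands above, the wrapper runs `cd` in a subshell, so your shell remains in the directory where you called it.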
