A look-ahead multi-entity Transformer for modeling coordinated agents in Python

baller2vec++

This is the repository for the paper:

Michael A. Alcorn and Anh Nguyen. baller2vec++: A Look-Ahead Multi-Entity Transformer For Modeling Coordinated Agents. arXiv. 2021.

|

|---|

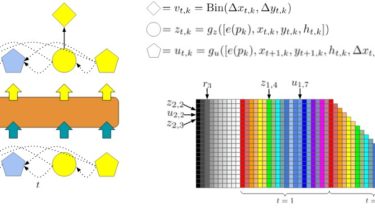

To learn statistically dependent agent trajectories, baller2vec++ uses a specially designed self-attention mask to simultaneously process three different sets of features vectors in a single Transformer. The three sets of feature vectors consist of location feature vectors like those found in baller2vec, look-ahead trajectory feature vectors, and starting location feature vectors. This design allows the model to integrate information about concurrent agent trajectories through multiple Transformer layers without seeing the future (in contrast to baller2vec). |
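The masking idea described above can be illustrated with a small sketch. This is not the mask from the paper or this repository: the function name `build_lookahead_mask`, the token layout (location tokens, then look-ahead tokens, then one starting-location token per agent), and the exact attention rules are assumptions chosen to show one causal ordering over (time step, agent) pairs under which a location token never receives information about its own agent's future, or about the future of agents that come later in the ordering, no matter how many layers are stacked.

```python
def build_lookahead_mask(num_steps, num_agents):
    """Illustrative mask only; mask[q][k] is True if query q may attend to key k.

    Assumed token layout (hypothetical, not taken from the repository):
      [0, n)        location tokens, flattened as step * num_agents + agent
      [n, 2n)       look-ahead tokens, same flattening
      [2n, 2n + K)  one starting-location token per agent
    """
    n = num_steps * num_agents
    size = 2 * n + num_agents
    mask = [[False] * size for _ in range(size)]

    for q in range(n):  # q is the flattened (time step, agent) position
        loc_q, ahead_q = q, n + q
        for key in range(q + 1):
            # Location keys up to and including the query's own position.
            mask[loc_q][key] = True
            mask[ahead_q][key] = True
            # A look-ahead query may see look-ahead keys up to its own position.
            mask[ahead_q][n + key] = True
            # A location query may only see strictly earlier look-ahead keys,
            # so it never sees its own future step.
            if key < q:
                mask[loc_q][n + key] = True

    # Starting locations carry no future information, so every token may see them.
    for row in range(size):
        for agent in range(num_agents):
            mask[row][2 * n + agent] = True
    return mask


if __name__ == "__main__":
    mask = build_lookahead_mask(num_steps=2, num_agents=2)
    for row in mask:
        print("".join("x" if allowed else "." for allowed in row))
```

In this sketch `True` means "may attend". Attention APIs differ on whether a boolean mask marks allowed or blocked positions, so the matrix may need to be inverted before it is passed to a particular implementation.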

| Training sample | baller2vec | baller2vec++ |
|---|---|---|

When trained on a dataset of perfectly coordinated agent trajectories, the