A Gentle Introduction to Mixture of Experts Ensembles

Mixture of experts is an ensemble learning technique developed in the field of neural networks.

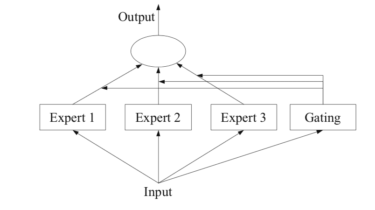

It involves decomposing a predictive modeling task into sub-tasks, training an expert model on each, developing a gating model that learns which expert to trust based on the input to be predicted, and combining the experts' predictions.

Although the technique was initially described using neural network experts and gating models, it can be generalized to use models of any type. As such, it shows a strong similarity to stacked generalization and belongs to the class of ensemble learning methods referred to as meta-learning.
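To make the idea concrete, here is a minimal sketch of how a gating model can combine expert predictions. It is an illustration under stated assumptions, not a reference implementation: the two experts (`expert_low`, `expert_high`), the `gate` function, and its `sharpness` parameter are hypothetical names invented for this example, and the gate is hand-written rather than learned. In a real mixture of experts, both the experts and the gating model would be fit to data.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Two hypothetical experts, each assumed to suit one sub-task
# (here, one region of a one-dimensional input).
def expert_low(x):
    return 2.0 * x  # assumed to suit inputs with x < 0


def expert_high(x):
    return -1.0 * x + 3.0  # assumed to suit inputs with x >= 0


def gate(x, sharpness=5.0):
    """Hand-written stand-in for a learned gating model.

    Returns one weight per expert, based only on the input x.
    """
    return softmax([-sharpness * x, sharpness * x])


def predict(x):
    """Combine expert outputs as a gate-weighted sum."""
    weights = gate(x)
    outputs = [expert_low(x), expert_high(x)]
    return sum(w * o for w, o in zip(weights, outputs))


if __name__ == "__main__":
    for x in (-2.0, 0.0, 2.0):
        print(x, gate(x), predict(x))
```

For inputs far below zero the gate puts almost all of its weight on `expert_low`, for inputs far above zero it favors `expert_high`, and near zero the two predictions are blended. The weighted sum is one common way to combine predictions; selecting only the single most trusted expert is another.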

In this tutorial, you will discover the mixture of experts approach to ensemble learning.

After completing this tutorial, you will know:

- An intuitive