Efficient Certification of Spatial Robustness

Recent work has exposed the vulnerability of computer vision models to spatial transformations. Because such models are widely used in safety-critical applications, it is crucial to quantify their robustness against spatial transformations...

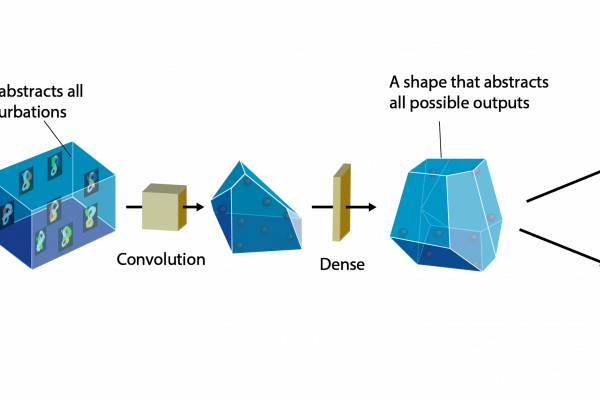

However, existing work provides only empirical quantification of spatial robustness via adversarial attacks, which lack provable guarantees. In this work, we propose novel convex relaxations that enable us, for the first time, to provide a certificate of robustness against spatial transformations. Our convex relaxations are model-agnostic and can be leveraged by a wide range of neural network verifiers. Experiments on several network architectures and different datasets demonstrate the effectiveness and scalability of our method.