Versatile Multi-Modal Pre-Training for Human-Centric Perception

¹S-Lab, Nanyang Technological University

²SenseTime Research

³Shanghai AI Laboratory

Accepted to CVPR 2022

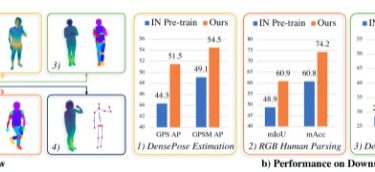

This repository contains the official implementation of Versatile Multi-Modal Pre-Training for Human-Centric Perception. For brevity, we name our method HCMoCo.

arXiv • Project Page • Dataset

Citation

If you find our work useful for your research, please consider citing the paper:

Updates

[03/2022] Code release!

[03/2022] HCMoCo has been accepted to CVPR 2022 🥳!

Installation

We recommend using conda to manage the Python environment. The commands below are provided for reference.