A complete, self-contained example for training ImageNet at state-of-the-art speed with FFCV

A minimal, single-file PyTorch ImageNet training script designed for hackability. Run `train_imagenet.py` to get…

- …high accuracies on ImageNet

- …with as many lines of code as the PyTorch ImageNet example

- …in 1/10th the time.

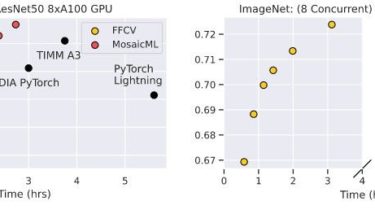

Results

Train models more efficiently, either with 8 GPUs in parallel or by training 8 ResNet-18s at once.

See benchmark setup here: https://docs.ffcv.io/benchmarks.html.

Citation

If you use this setup in your research, cite:

@misc{leclerc2022ffcv,
  author = {Guillaume Leclerc and Andrew Ilyas and Logan Engstrom and Sung Min Park and Hadi Salman and Aleksander Madry},
  title = {ffcv},
  year = {2022},
  howpublished = {\url{https://github.com/libffcv/ffcv/}},
  note = {commit xxxxxxx}
}

Configurations

The configuration files corresponding to the above results are:

| Link to Config | top_1 | top_5 | # Epochs | Time (mins) | Architecture | Setup |
|---|---|---|---|---|---|---|
| Link | 0.784 | 0.941 | 88 | 77.2 | ResNet-50 | 8 |
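
To put the ResNet-50 row in perspective, the short sketch below turns the epoch count and wall-clock time from the table into an average time per epoch and a rough aggregate throughput. It is only a back-of-the-envelope estimate: the training-set size is the standard ImageNet-1k (ILSVRC-2012) figure, which this README does not state, and the calculation assumes the reported time is spent entirely on full passes over the training set. The function names are hypothetical helpers, not part of FFCV.

```python
# Figures copied from the ResNet-50 row of the configuration table.
EPOCHS = 88
TOTAL_MINUTES = 77.2

# Assumption (not stated in this README): size of the standard
# ImageNet-1k (ILSVRC-2012) training split.
IMAGENET_TRAIN_IMAGES = 1_281_167


def seconds_per_epoch(total_minutes: float, epochs: int) -> float:
    """Average wall-clock seconds spent per epoch."""
    return total_minutes * 60.0 / epochs


def images_per_second(total_minutes: float, epochs: int, images_per_epoch: int) -> float:
    """Rough aggregate throughput, assuming all reported time is training time."""
    return images_per_epoch * epochs / (total_minutes * 60.0)


if __name__ == "__main__":
    print(f"seconds per epoch: {seconds_per_epoch(TOTAL_MINUTES, EPOCHS):.1f}")
    print(
        "approx. images per second: "
        f"{images_per_second(TOTAL_MINUTES, EPOCHS, IMAGENET_TRAIN_IMAGES):.0f}"
    )
```

Because the reported time may also include evaluation and startup overhead, treat the throughput figure as a lower bound on training speed rather than a benchmark result.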