Cosine Annealing With Warmup

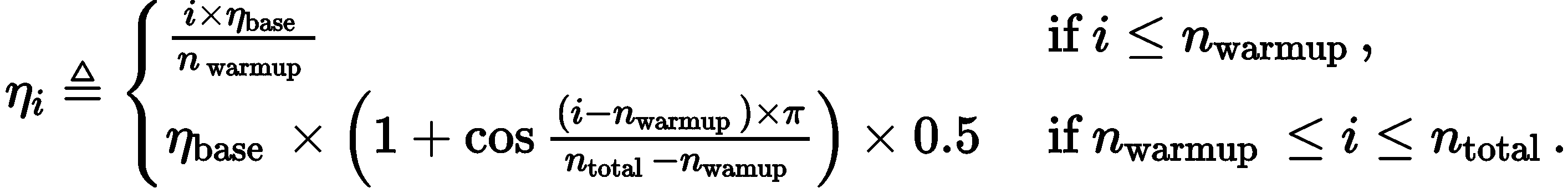

Formulation

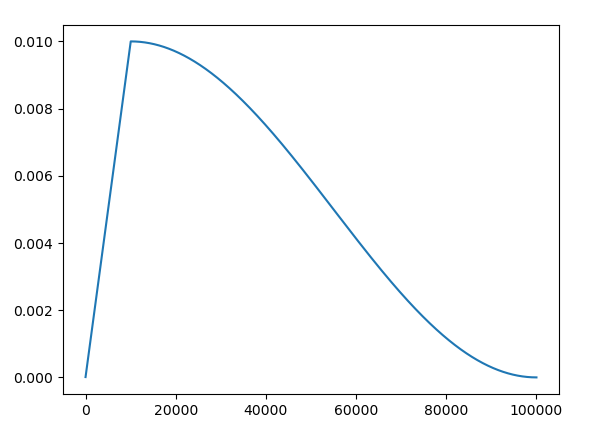

The learning rate is annealed with a cosine schedule over n_total training steps, preceded by a warmup period of n_warmup steps. The learning rate at step i is therefore computed as:
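A minimal sketch of such a schedule is given here, under stated assumptions rather than as this repository's exact formula. It assumes a linear warmup from `warmup_lr` to `base_lr` during the first n_warmup steps, followed by cosine annealing from `base_lr` down to `final_lr` over the remaining steps. The function name `cosine_warmup_lr` and its defaults are hypothetical, chosen only for illustration.

```python
import math


def cosine_warmup_lr(i, n_total, n_warmup, base_lr, warmup_lr=0.0, final_lr=0.0):
    """Learning rate at step i (0-indexed) for a warmup + cosine schedule.

    Assumptions (not confirmed by the text): warmup is linear, and the
    cosine phase decays from base_lr to final_lr.
    """
    if i < n_warmup:
        # Assumed linear ramp from warmup_lr towards base_lr.
        return warmup_lr + (base_lr - warmup_lr) * i / n_warmup
    # Fraction of the post-warmup phase completed, in [0, 1].
    progress = (i - n_warmup) / max(1, n_total - n_warmup)
    # Half-cosine decay: equals base_lr at progress 0 and final_lr at progress 1.
    return final_lr + 0.5 * (base_lr - final_lr) * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    # Print a few sample points of the schedule.
    for step in (0, 5, 10, 55, 100):
        print(step, cosine_warmup_lr(step, n_total=100, n_warmup=10, base_lr=0.1))
```

Under these assumptions the rate rises linearly during warmup, peaks at `base_lr` when warmup ends, and then follows half a cosine period down to `final_lr` at step n_total.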

Over the course of training, the learning rate changes as:

Usage

# optimizer, warmup_epochs, warmup_lr, num_epochs, base_lr, final_lr, iter_per_epoch

lr_scheduler = LR_Scheduler(

optimizer,

args.warmup_epochs, args.warmup_lr