Closing the generalization gap in large batch training of neural networks

Train longer, generalize better – Big batch training

This repository contains the code used to generate the results in “Train longer, generalize better: closing the generalization gap in large batch training of neural networks” by Elad Hoffer, Itay Hubara and Daniel Soudry.

It is based on convNet.pytorch and includes some helpful options, such as:

- Training on several datasets

- Complete logging of each training experiment

- Graph visualization of the training/validation loss and accuracy

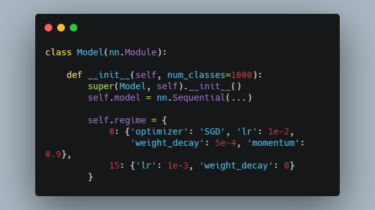

- Definition of preprocessing and optimization regime for each model
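A per-model optimization regime can be written as a list of epoch-indexed settings. Each entry takes effect at its start epoch and overrides only the keys it lists. The sketch below assumes a format similar to convNet.pytorch's `regime` lists. The `REGIME` values (including the epochs 81 and 122) and the `settings_at_epoch` helper are hypothetical illustrations, not this repository's exact API.

```python
from typing import Any, Dict, List

# Hypothetical regime: each dict takes effect at its 'epoch' and overrides
# only the keys it lists; settings from earlier entries otherwise persist.
REGIME: List[Dict[str, Any]] = [
    {'epoch': 0, 'optimizer': 'SGD', 'lr': 1e-1,
     'momentum': 0.9, 'weight_decay': 1e-4},
    {'epoch': 81, 'lr': 1e-2},
    {'epoch': 122, 'lr': 1e-3},
]


def settings_at_epoch(regime: List[Dict[str, Any]], epoch: int) -> Dict[str, Any]:
    """Merge every regime entry whose start epoch is <= `epoch`."""
    settings: Dict[str, Any] = {}
    for entry in sorted(regime, key=lambda e: e['epoch']):
        if entry['epoch'] > epoch:
            break  # later entries have not started yet
        settings.update({k: v for k, v in entry.items() if k != 'epoch'})
    return settings


# Example: at epoch 100 the first two entries apply, so lr is 1e-2 while
# optimizer, momentum and weight_decay are carried over from epoch 0.
print(settings_at_epoch(REGIME, 100))
```

Keeping each entry sparse means a learning-rate drop only has to state the new `lr`, and everything else stays as configured at the start of training.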

Dependencies

Data

- Configure your dataset path in data.py.

- To obtain the ILSVRC (ImageNet) data, register for access on the ImageNet website: http://www.image-net.org/
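As an illustration only, a dataset-path configuration might map each `--dataset` name to a directory under a single root. The root path, the `DATASET_PATHS` dictionary and the `get_dataset_path` helper below are hypothetical placeholders. Check data.py for the actual variable names before editing it.

```python
import os

# Hypothetical root directory: point this at wherever your datasets live.
DATASETS_ROOT = '/path/to/datasets'

# Hypothetical mapping from the --dataset name to its directory.
DATASET_PATHS = {
    'cifar10': os.path.join(DATASETS_ROOT, 'CIFAR10'),
    'cifar100': os.path.join(DATASETS_ROOT, 'CIFAR100'),
    'imagenet': os.path.join(DATASETS_ROOT, 'ImageNet'),
}


def get_dataset_path(name: str, split: str = 'train') -> str:
    """Return the directory to load a dataset split from."""
    if name not in DATASET_PATHS:
        raise ValueError(f'unknown dataset: {name!r}')
    root = DATASET_PATHS[name]
    # ImageNet-style folders keep one subdirectory per split (train/val);
    # torchvision's CIFAR loaders instead take the root plus a train flag.
    if name == 'imagenet':
        return os.path.join(root, split)
    return root
```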

Experiment examples

```shell
python main_normal.py --dataset cifar10 --model resnet --save cifar10_resnet44_bs2048_lr_fix --epochs 100 --b 2048 --lr_bb_fix;

python main_normal.py --dataset cifar10
```
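The paper's large-batch recipe includes scaling the learning rate with the square root of the batch-size ratio. It also adapts the regime so that the number of weight updates matches the small-batch baseline, which is the "train longer" part of the title. The sketch below computes both adjustments. It assumes that `--lr_bb_fix` corresponds to the square-root learning-rate scaling. The `large_batch_settings` helper, the base batch size of 128 and the base learning rate of 0.1 are illustrative assumptions, not values taken from this repository.

```python
import math
from typing import Tuple


def large_batch_settings(base_lr: float, base_batch_size: int,
                         base_epochs: int, batch_size: int) -> Tuple[float, int]:
    """Adapt a small-batch schedule to a larger batch size (hypothetical helper).

    - The learning rate is scaled by sqrt(batch_size / base_batch_size).
    - The epoch count is scaled by batch_size / base_batch_size, so the total
      number of iterations (weight updates) matches the small-batch baseline.
    """
    ratio = batch_size / base_batch_size
    lr = base_lr * math.sqrt(ratio)
    epochs = int(round(base_epochs * ratio))
    return lr, epochs


# Example: moving from batch 128 to batch 2048 is a 16x ratio, so the
# learning rate grows by sqrt(16) = 4 and the epoch count grows 16x.
lr, epochs = large_batch_settings(base_lr=0.1, base_batch_size=128,
                                  base_epochs=100, batch_size=2048)
print(lr, epochs)
```

Scaling the epochs by the full ratio keeps the iteration count fixed. With 50,000 CIFAR-10 training images, for example, batch 128 gives about 391 iterations per epoch and batch 2048 gives about 25, so the larger batch needs roughly 16 times as many epochs to perform the same number of updates.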