Multi-Task Vision and Language Representation Learning

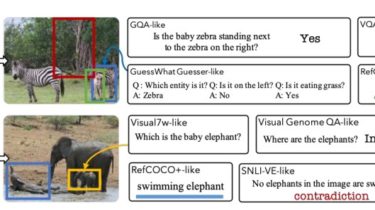

12-in-1: Multi-Task Vision and Language Representation Learning

This repository provides code and pre-trained models for 12-in-1: Multi-Task Vision and Language Representation Learning. Please cite the following if you use this code:

    @InProceedings{Lu_2020_CVPR,
      author = {Lu, Jiasen and Goswami, Vedanuj and Rohrbach, Marcus and Parikh, Devi and Lee, Stefan},
      title = {12-in-1: Multi-Task Vision and Language Representation Learning},
      booktitle = {The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
      month = {June},
      year = {2020}
    }

and ViLBERT: Pretraining Task-Agnostic Visiolinguistic Representations for Vision-and-Language Tasks:

    @inproceedings{lu2019vilbert,
      title={Vilbert: Pretraining task-agnostic visiolinguistic representations for vision-and-language tasks},
      author={Lu, Jiasen and Batra, Dhruv and Parikh, Devi and Lee, Stefan},
      booktitle={Advances in Neural Information Processing Systems},
      pages={13--23},
      year={2019}
    }

Repository Setup

- Create a fresh conda environment and install all dependencies.

conda