A library for finding knowledge neurons in pretrained transformer models

knowledge-neurons

An open source repository replicating the 2021 paper Knowledge Neurons in Pretrained Transformers by Dai et al., and extending the technique to autoregressive models, as well as MLMs.

The Huggingface Transformers library is used as the backend, so any model you want to probe must be implemented there.

Currently integrated models:

BERT_MODELS = ["bert-base-uncased", "bert-base-multilingual-uncased"]

GPT2_MODELS = ["gpt2"]

GPT_NEO_MODELS = [

"EleutherAI/gpt-neo-125M",

"EleutherAI/gpt-neo-1.3B",

"EleutherAI/gpt-neo-2.7B",

]

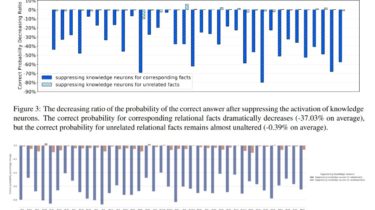

The technique from Dai et al. has been used to locate knowledge neurons in the huggingface bert-base-uncased model for all the head/relation/tail entities in the PARAREL dataset. Both the neurons, and more detailed results of the experiment are published at bert_base_uncased_neurons/*.json and can be replicated by running pararel_evaluate.py. More details in the Evaluations on the PARAREL dataset section.

Either clone