Learning General Purpose Distributed Sentence Representations via Large Scale Multi-task Learning

Sandeep Subramanian, Adam Trischler, Yoshua Bengio & Christopher Pal

ICLR 2018

About

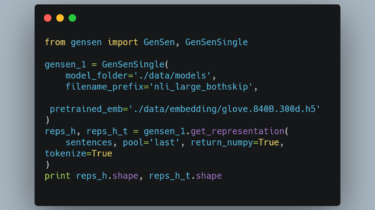

GenSen is a technique for learning general-purpose, fixed-length sentence representations via multi-task training. These representations are useful for transfer learning and low-resource learning. For details, please refer to our ICLR paper.

Code

We provide a PyTorch implementation of our paper, along with pre-trained models and code to evaluate these models on a variety of transfer learning benchmarks.

Requirements

- Python 2.7 (Python 3 compatibility coming soon)

- PyTorch 0.2 or 0.3

- nltk

- h5py

- numpy

- scikit-learn
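
Before running anything, it can help to confirm that the packages listed above are importable in the current environment. The following is a minimal sketch rather than part of the GenSen code: the helper `find_missing` and the `REQUIRED` list are hypothetical names introduced here, and the import names are assumptions based on how these packages are normally imported (PyTorch as `torch`, scikit-learn as `sklearn`). It does not check versions.

```python
import importlib

# Assumed import names for the packages listed above.
# PyTorch is imported as "torch" and scikit-learn as "sklearn".
REQUIRED = ["torch", "nltk", "h5py", "numpy", "sklearn"]


def find_missing(modules):
    """Return the module names from `modules` that cannot be imported."""
    missing = []
    for name in modules:
        try:
            importlib.import_module(name)
        except ImportError:
            # The package is not installed (or not on the import path).
            missing.append(name)
    return missing


if __name__ == "__main__":
    missing = find_missing(REQUIRED)
    if missing:
        print("Missing packages: " + ", ".join(missing))
    else:
        print("All required packages are importable.")
```

Any name reported as missing would need to be installed before the steps under Usage can run.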

Usage

Setting up models & pre-trained word vectors

You can download our pre-trained models and set up pre-trained word vectors for vocabulary expansion by