Poincaré Embeddings for Learning Hierarchical Representations

PyTorch implementation of Poincaré Embeddings for Learning Hierarchical Representations

Installation

Clone this repository, then create the conda environment and build the extension modules via

git clone https://github.com/facebookresearch/poincare-embeddings.git

cd poincare-embeddings

conda env create -f environment.yml

source activate poincare

python setup.py build_ext --inplace

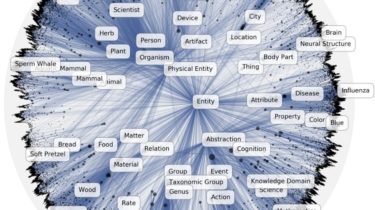

Example: Embedding WordNet Mammals

To embed the transitive closure of the WordNet mammals subtree, first generate the data via

cd wordnet

python transitive_closure.py

This will generate the transitive closure of the full noun hierarchy as well as of the mammals subtree of WordNet.
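As a rough illustration of what "transitive closure" means here, the sketch below expands direct child-parent edges into all (descendant, ancestor) pairs. It is not the code in transitive_closure.py: the function name `transitive_closure`, the edge format, and the toy hierarchy are assumptions made for this example only.

```python
from collections import defaultdict


def transitive_closure(edges):
    """Return every (descendant, ancestor) pair implied by direct (child, parent) edges."""
    parents = defaultdict(set)
    for child, parent in edges:
        parents[child].add(parent)

    closure = set()
    for node in list(parents):
        # Walk upward from each node, collecting all reachable ancestors.
        stack = list(parents[node])
        seen = set()
        while stack:
            ancestor = stack.pop()
            if ancestor in seen:
                continue
            seen.add(ancestor)
            closure.add((node, ancestor))
            stack.extend(parents.get(ancestor, ()))
    return closure


# Hypothetical toy hierarchy, not the actual WordNet data.
toy_edges = [
    ("dog", "canine"),
    ("canine", "carnivore"),
    ("carnivore", "mammal"),
    ("cat", "feline"),
    ("feline", "carnivore"),
]
toy_closure = transitive_closure(toy_edges)
```

In this toy example the closure contains indirect pairs such as ("dog", "mammal") in addition to the five direct edges.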

To embed the mammals subtree in the reconstruction setting (i.e., without missing data), go to the root directory of the project and run

./train-mammals.sh

This shell script includes the appropriate parameter settings for the mammals subtree and saves the trained model as mammals.pth.
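The trained embeddings live in the Poincaré ball, where the distance between two points u and v is arcosh(1 + 2‖u − v‖² / ((1 − ‖u‖²)(1 − ‖v‖²))). The sketch below computes that distance in plain Python for illustration. It is independent of this repository's code: the function name `poincare_distance` is hypothetical, and the inputs are assumed to be coordinate sequences with norm strictly less than 1.

```python
import math


def poincare_distance(u, v):
    """Distance between two points inside the unit ball (Poincaré ball model)."""
    sq_norm_u = sum(x * x for x in u)
    sq_norm_v = sum(x * x for x in v)
    sq_dist = sum((a - b) ** 2 for a, b in zip(u, v))
    # Assumes both points lie strictly inside the unit ball, so the denominator is positive.
    return math.acosh(1 + 2 * sq_dist / ((1 - sq_norm_u) * (1 - sq_norm_v)))


# Distances grow quickly as points approach the boundary of the ball.
d_near_origin = poincare_distance([0.0, 0.0], [0.5, 0.0])
d_near_boundary = poincare_distance([0.0, 0.0], [0.99, 0.0])
```

Points near the boundary are far from everything else, which is what lets leaves of a hierarchy spread out while general concepts sit near the origin.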