Efficient Neural Architecture Search (ENAS) in PyTorch

PyTorch implementation of Efficient Neural Architecture Search via Parameters Sharing.

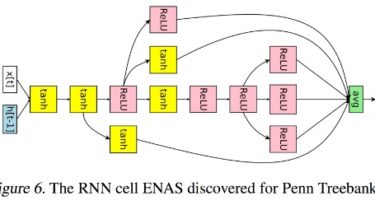

ENAS reduce the computational requirement (GPU-hours) of Neural Architecture Search (NAS) by 1000x via parameter sharing between models that are subgraphs within a large computational graph. SOTA on Penn Treebank language modeling.

Prerequisites

- Python 3.6+

- PyTorch==0.3.1

- tqdm, scipy, imageio, graphviz, tensorboardX

Usage

Install prerequisites with:

conda install graphviz

pip install -r requirements.txt

To train ENAS to discover a recurrent cell for RNN:

python main.py --network_type rnn --dataset ptb --controller_optim adam --controller_lr 0.00035

--shared_optim sgd --shared_lr 20.0 --entropy_coeff 0.0001

python main.py --network_type rnn --dataset wikitext

To train ENAS to discover CNN architecture (in progress):

python main.py --network_type cnn --dataset cifar --controller_optim momentum --controller_lr_cosine=True

--controller_lr_max 0.05 --controller_lr_min 0.0001 --entropy_coeff 0.1

or you can