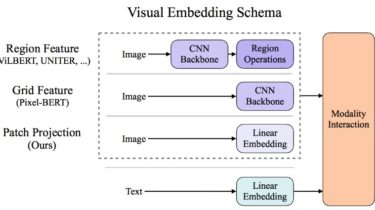

Vision-and-Language Transformer Without Convolution or Region Supervision

ViLT

Code for the ICML 2021 (long talk) paper: “ViLT: Vision-and-Language Transformer Without Convolution or Region Supervision”

Install

```bash
pip install -r requirements.txt
pip install -e .
```

Download Pretrained Weights

We provide five pretrained checkpoints:

- ViLT-B/32 pretrained with MLM+ITM for 200k steps on GCC+SBU+COCO+VG (ViLT-B/32 200k) link

- ViLT-B/32 200k finetuned on VQAv2 link

- ViLT-B/32 200k finetuned on NLVR2 link

- ViLT-B/32 200k finetuned on COCO IR/TR link

- ViLT-B/32 200k finetuned on F30K IR/TR link
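The "B/32" in the checkpoint names follows the ViT naming convention: a Base-sized transformer that splits the input image into 32×32-pixel patches, each of which becomes one visual token. The sketch below uses a hypothetical helper, `vilt_patch_grid` (not part of this repository), to count those tokens. The 384×640 input size in the example is an illustrative assumption rather than a value taken from this README, and the helper assumes each image side is already a multiple of the patch size (any remainder is dropped).

```python
from typing import Tuple


def vilt_patch_grid(height: int, width: int, patch_size: int = 32) -> Tuple[int, int, int]:
    """Return (rows, cols, num_patches) for a ViT-style patch split.

    Hypothetical helper for illustration only. Uses floor division,
    so any border pixels that do not fill a whole patch are ignored.
    """
    rows = height // patch_size  # patches along the vertical axis
    cols = width // patch_size   # patches along the horizontal axis
    return rows, cols, rows * cols


if __name__ == "__main__":
    # Assumed example resolution; a /32 model yields a 12 x 20 grid.
    print(vilt_patch_grid(384, 640))  # (12, 20, 240)
```

Halving the patch size quadruples the number of visual tokens, which is why /32 models are cheaper to run than /16 models at the same resolution.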

Out-of-the-box MLM + Visualization Demo

```bash
pip install gradio==1.6.4
python demo.py with num_gpus=<0 if you have no GPUs, else 1> load_path="/vilt_200k_mlm_itm.ckpt"
```

For example:

```bash
python demo.py with num_gpus=0 load_path="weights/vilt_200k_mlm_itm.ckpt"
```
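If you want to launch the demo from a Python script, you can assemble the same arguments as a list. This is a minimal sketch: `build_demo_cmd` is a hypothetical helper, not part of this repository. It assumes the `with key=value` overrides are Sacred-style config updates (inferred from the syntax, not stated here), and it only accepts `num_gpus` of 0 or 1, matching the instructions above. Because an argument list bypasses the shell, the double quotes around `load_path` in the shell command are unnecessary here.

```python
import shlex
from typing import List


def build_demo_cmd(load_path: str, num_gpus: int, script: str = "demo.py") -> List[str]:
    """Build argv for the MLM demo (hypothetical helper, for illustration)."""
    # The README only documents 0 (CPU) or 1 (single GPU).
    if num_gpus not in (0, 1):
        raise ValueError("num_gpus must be 0 (no GPU) or 1")
    # Each `key=value` after `with` overrides one config entry.
    return ["python", script, "with", f"num_gpus={num_gpus}", f"load_path={load_path}"]


if __name__ == "__main__":
    cmd = build_demo_cmd("weights/vilt_200k_mlm_itm.ckpt", num_gpus=0)
    # Print a shell-safe version of the command for inspection.
    print(shlex.join(cmd))
    # To actually launch the demo: subprocess.run(cmd, check=True)
```

Running the script prints `python demo.py with num_gpus=0 load_path=weights/vilt_200k_mlm_itm.ckpt`, which is equivalent to the example command above once the shell has removed its quotes.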

Out-of-the-box VQA Demo

pip install