Mind Visual Tokens for Vision Transformer

So-ViT

This repository contains the source code under PyTorch framework and models trained on ImageNet-1K dataset for the following paper:

@articles{So-ViT,

author = {Jiangtao Xie, Ruiren Zeng, Qilong Wang, Ziqi Zhou, Peihua Li},

title = {So-ViT: Mind Visual Tokens for Vision Transformer},

booktitle = {arXiv:2104.10935},

year = {2021}

}

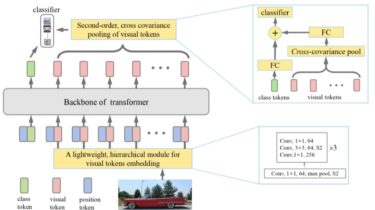

The Vision Transformer (ViT) heavily depends on pretraining using ultra large-scale datasets (e.g. ImageNet-21K or JFT-300M) to achieve high performance, while significantly underperforming on ImageNet-1K if trained from scratch. We propose a novel So-ViT model toward addressing this problem, by carefully considering the role of visual tokens.

Above all, for classification head, the ViT only exploits class token while entirely neglecting rich semantic information inherent in high-level visual tokens. Therefore, we propose a new classification paradigm, where the