Swapping Autoencoder for Deep Image Manipulation

swapping-autoencoder-pytorch

Official Implementation of Swapping Autoencoder for Deep Image Manipulation (NeurIPS 2020)

Taesung Park, Jun-Yan Zhu, Oliver Wang, Jingwan Lu, Eli Shechtman, Alexei A. Efros, Richard Zhang

UC Berkeley and Adobe Research

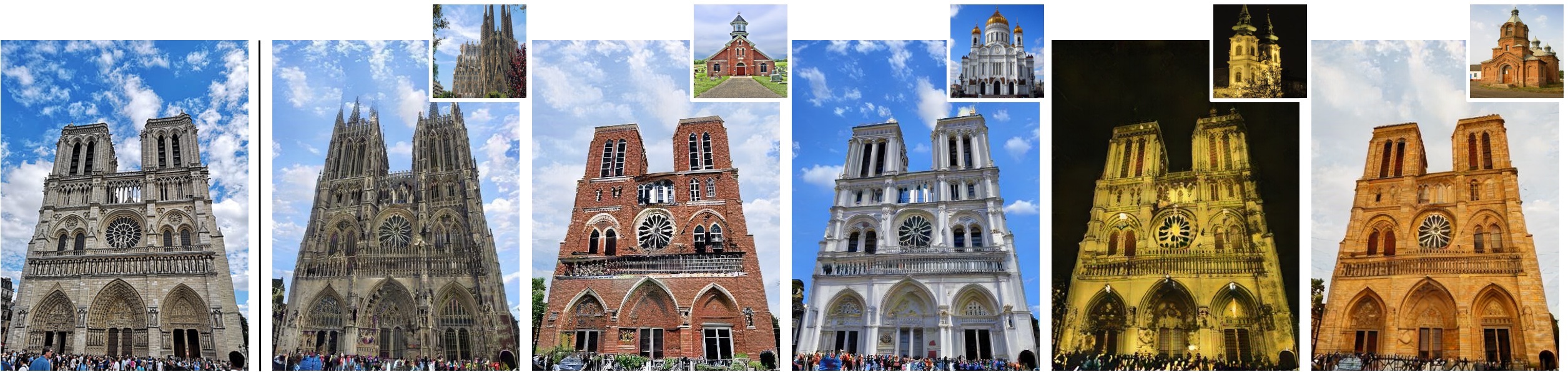

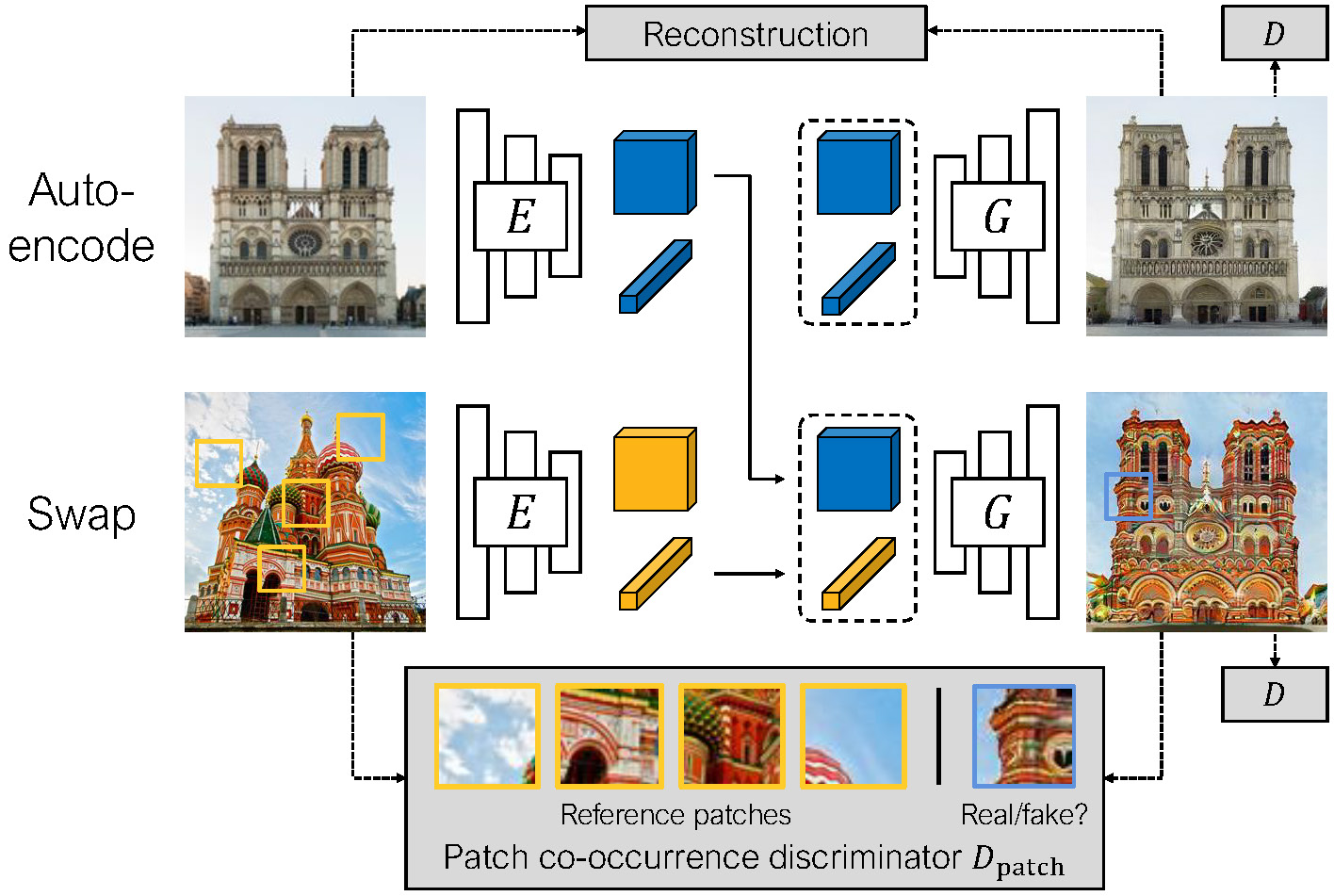

Overview

Swapping Autoencoder consists of two operations: autoencoding (top) and swapping (bottom).

Top: An encoder E embeds an input (Notre-Dame) into two codes. The structure code is a tensor with spatial dimensions; the texture code is a 2048-dimensional vector. Decoding with generator G should produce a realistic image (enforced by discriminator D) that matches the input (reconstruction loss).
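To make the data flow concrete, here is a minimal PyTorch sketch of the two operations. It is not the code in this repository: `ToyEncoder`, `ToyGenerator`, `reconstruct`, and `swap` are hypothetical names, the layer sizes are arbitrary, and the L1 form of the reconstruction loss is an assumption. Only the code shapes (a spatial structure tensor and a 2048-dimensional texture vector) come from the description above. The discriminator terms are omitted.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

TEXTURE_DIM = 2048  # texture code size stated in the overview


class ToyEncoder(nn.Module):
    """Hypothetical stand-in for E: image -> (structure tensor, texture vector)."""

    def __init__(self, structure_channels=8):
        super().__init__()
        self.backbone = nn.Sequential(
            nn.Conv2d(3, 32, kernel_size=3, stride=2, padding=1),
            nn.LeakyReLU(0.2),
            nn.Conv2d(32, 64, kernel_size=3, stride=2, padding=1),
            nn.LeakyReLU(0.2),
        )
        self.to_structure = nn.Conv2d(64, structure_channels, kernel_size=1)
        self.to_texture = nn.Linear(64, TEXTURE_DIM)

    def forward(self, image):
        features = self.backbone(image)
        # Structure code keeps its spatial layout (here H/4 x W/4).
        structure = self.to_structure(features)
        # Texture code: global average pooling removes spatial layout.
        texture = self.to_texture(features.mean(dim=(2, 3)))
        return structure, texture


class ToyGenerator(nn.Module):
    """Hypothetical stand-in for G: texture rescales structure channels."""

    def __init__(self, structure_channels=8):
        super().__init__()
        self.to_scale = nn.Linear(TEXTURE_DIM, structure_channels)
        self.decode = nn.Sequential(
            nn.Upsample(scale_factor=4, mode="nearest"),
            nn.Conv2d(structure_channels, 3, kernel_size=3, padding=1),
        )

    def forward(self, structure, texture):
        scale = self.to_scale(texture)[:, :, None, None]
        return self.decode(structure * (1 + scale))


def reconstruct(E, G, image):
    """Autoencoding: both codes come from the same image."""
    structure, texture = E(image)
    return G(structure, texture)


def swap(E, G, structure_image, texture_image):
    """Swapping: structure from one image, texture from another."""
    structure, _ = E(structure_image)
    _, texture = E(texture_image)
    return G(structure, texture)


torch.manual_seed(0)
E, G = ToyEncoder(), ToyGenerator()
x1 = torch.rand(1, 3, 64, 64)  # placeholder for the first image
x2 = torch.rand(1, 3, 64, 64)  # placeholder for the second image

structure, texture = E(x1)
recon = reconstruct(E, G, x1)
recon_loss = F.l1_loss(recon, x1)  # assumed L1 reconstruction loss
hybrid = swap(E, G, x1, x2)
```

In this sketch the only difference between the two operations is which image supplies the texture code, so swapping an image with itself reduces to plain reconstruction.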

Bottom: Decoding with the texture code from a second image (Saint Basil’s Cathedral) should produce an image that looks realistic (via D) and matches the texture of that second image, by training with a patch co-occurrence discriminator Dpatch