An advanced quantization library written for PyTorch

Hessian AWare Quantization

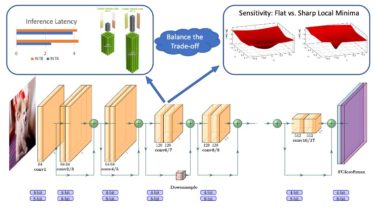

HAWQ is an advanced quantization library written for PyTorch. HAWQ enables low-precision and mixed-precision uniform quantization, with direct hardware implementation through TVM.
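The sketch below illustrates what uniform quantization means here. Real values are mapped to k-bit signed integers through a single scale factor and then mapped back. It assumes symmetric, per-tensor quantization with a max-absolute-value range. The function names are hypothetical and are not part of the HAWQ API.

```python
from typing import List


def symmetric_scale(values: List[float], num_bits: int) -> float:
    """Per-tensor scale that maps max(|x|) onto the largest signed k-bit integer."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8 bits, 7 for 4 bits
    max_abs = max(abs(v) for v in values)
    # An all-zero tensor would give a zero scale; fall back to 1.0.
    return max_abs / qmax if max_abs > 0 else 1.0


def quantize(values: List[float], scale: float, num_bits: int) -> List[int]:
    """Round x / scale to the nearest integer and clamp to [-qmax, qmax]."""
    qmax = 2 ** (num_bits - 1) - 1
    # Python's round() sends exact ties to the nearest even integer.
    return [max(-qmax, min(qmax, round(v / scale))) for v in values]


def dequantize(q_values: List[int], scale: float) -> List[float]:
    """Map integers back to reals; if nothing was clamped, error is at most scale / 2."""
    return [scale * q for q in q_values]


weights = [0.5, -1.27, 0.02, 1.0]
scale = symmetric_scale(weights, num_bits=8)
q = quantize(weights, scale, num_bits=8)
recovered = dequantize(q, scale)
```

In this picture, mixed-precision quantization means choosing `num_bits` separately for each layer (for example, 4 bits for some layers and 8 for others) instead of using one global bit width.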

Installation

- PyTorch version >= 1.4.0

- Python version >= 3.6

- For training new models, you’ll also need NVIDIA GPUs and NCCL

- To install HAWQ and develop locally:

```bash
git clone https://github.com/Zhen-Dong/HAWQ.git
cd HAWQ
pip install -r requirements.txt
```
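You can check the requirements listed above before training with a short script. The helper below is hypothetical and is not shipped with HAWQ. It only compares version strings and calls the standard `torch.cuda.is_available()`. Pre-release suffixes such as `a0` are ignored, which is a simplifying assumption.

```python
import sys
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Parse the leading numeric release part, e.g. '1.13.1+cu117' -> (1, 13, 1)."""
    parts = []
    for piece in version.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break  # stop at suffixes such as 'a0' or 'rc1'
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(version: str, minimum: str) -> bool:
    """Compare release tuples after zero-padding, so '1.4' counts as '1.4.0'."""
    a, b = parse_version(version), parse_version(minimum)
    width = max(len(a), len(b))
    a = a + (0,) * (width - len(a))
    b = b + (0,) * (width - len(b))
    return a >= b


if __name__ == "__main__":
    print("Python >= 3.6:", sys.version_info >= (3, 6))
    try:
        import torch
        print("PyTorch >= 1.4.0:", meets_minimum(torch.__version__, "1.4.0"))
        print("CUDA available:", torch.cuda.is_available())
    except ImportError:
        print("PyTorch is not installed")
```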

Getting Started

Quantization-Aware Training

An example of running quantization-aware training with uniform 8-bit quantization for ResNet50 on ImageNet:

```bash
export CUDA_VISIBLE_DEVICES=0
python quant_train.py -a resnet50 --epochs 1 --lr 0.0001 --batch-size 128 \
    --data /path/to/imagenet/ --pretrained --save-path /path/to/checkpoints/ \
    --act-range-momentum=0.99 --wd 1e-4 --data-percentage 0.0001 \
    --fix-BN --checkpoint-iter -1 --quant-scheme uniform8
```
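The `--act-range-momentum=0.99` flag suggests that activation ranges are tracked with an exponential moving average during training. The sketch below shows that idea under this assumption: each batch's min/max is blended into a running min/max using the momentum. The class is hypothetical, and HAWQ's own implementation may differ in detail, for example in how the first batch is handled.

```python
from typing import List, Optional


class EMARangeTracker:
    """Hypothetical tracker: running activation range updated with momentum."""

    def __init__(self, momentum: float = 0.99) -> None:
        self.momentum = momentum
        self.min_val: Optional[float] = None
        self.max_val: Optional[float] = None

    def update(self, batch: List[float]) -> None:
        cur_min, cur_max = min(batch), max(batch)
        if self.min_val is None or self.max_val is None:
            # First batch: initialise the range directly.
            self.min_val, self.max_val = cur_min, cur_max
        else:
            m = self.momentum
            # Keep a fraction m of the old range and blend in (1 - m) of the new one.
            self.min_val = m * self.min_val + (1 - m) * cur_min
            self.max_val = m * self.max_val + (1 - m) * cur_max

    def symmetric_scale(self, num_bits: int = 8) -> float:
        """Scale mapping the tracked range onto signed k-bit integers."""
        if self.min_val is None or self.max_val is None:
            raise ValueError("update() must be called at least once")
        qmax = 2 ** (num_bits - 1) - 1
        return max(abs(self.min_val), abs(self.max_val)) / qmax
```

With a momentum of 0.99, each new batch moves the range by only 1% of the gap between the running value and the batch value. A single outlier batch therefore has a limited effect on the quantization scale.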

The commands for other quantization schemes and for other networks are shown in the model