How to Improve Performance With Transfer Learning for Deep Learning Neural Networks

Last Updated on August 25, 2020

An interesting benefit of deep learning neural networks is that they can be reused on related problems.

Transfer learning refers to a technique in which a model is first developed for a different but related predictive modeling problem and then reused, partly or wholly, to accelerate training and improve the performance of a model on the problem of interest.

In deep learning, this means reusing the weights in one or more layers from a pre-trained network model in a new model and either keeping the weights fixed, fine-tuning them, or adapting the weights entirely when training the model.
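As a rough illustration of reusing layer weights, the sketch below copies the first hidden layer of one Keras MLP into a second model and freezes it. The `build_mlp` helper, the layer names, the layer sizes, and the random data are all assumptions made for this example. The "source" model is left untrained here as a stand-in for a genuinely pre-trained network.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers


def build_mlp(n_inputs=2, n_classes=3):
    # Hypothetical small MLP; the sizes are illustrative only.
    return keras.Sequential([
        keras.Input(shape=(n_inputs,)),
        layers.Dense(5, activation="relu", name="hidden1"),
        layers.Dense(5, activation="relu", name="hidden2"),
        layers.Dense(n_classes, activation="softmax", name="output"),
    ])


# Stand-in for a network already trained on the related (source) problem.
source_model = build_mlp()

# New model for the problem of interest, with the same architecture.
target_model = build_mlp()

# Reuse the weights of the first hidden layer from the source model.
transferred = source_model.get_layer("hidden1").get_weights()
target_model.get_layer("hidden1").set_weights(transferred)

# Option 1: keep the transferred weights fixed during training.
target_model.get_layer("hidden1").trainable = False
# Option 2 (fine-tuning): skip the line above so the layer stays trainable.

# Compile after changing `trainable` so the setting takes effect.
target_model.compile(optimizer="adam", loss="sparse_categorical_crossentropy")

# Synthetic data, used only to show that training runs.
X = np.random.rand(20, 2).astype("float32")
y = np.random.randint(0, 3, size=20)
target_model.fit(X, y, epochs=1, verbose=0)
```

With the layer frozen, only `hidden2` and `output` are updated by `fit`. Leaving `hidden1` trainable would instead treat the transferred weights as a starting point to be fine-tuned.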

In this tutorial, you will discover how to use transfer learning to improve the performance of deep learning neural networks in Python with Keras.

After completing this tutorial, you will know:

- Transfer learning is a method for reusing a model trained on a related predictive modeling problem.

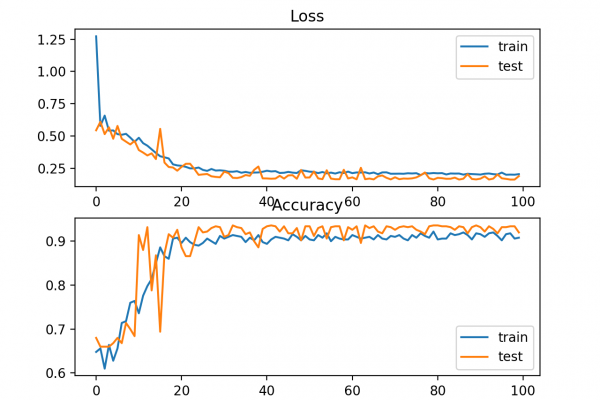

- Transfer learning can be used to accelerate the training of neural networks as either a weight initialization scheme or feature extraction method.

- How to use transfer learning to improve the performance of an MLP for a multiclass classification problem.
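The feature extraction use mentioned in the list above could look roughly like the following sketch. The outputs of a pre-trained network's hidden layers serve as fixed input features for a new, small classifier. The architecture, layer names, and random data are assumptions for illustration, and the source model is again an untrained stand-in for a pre-trained one.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# Stand-in for a model pre-trained on a related multiclass problem.
inputs = keras.Input(shape=(2,))
h = layers.Dense(5, activation="relu", name="hidden1")(inputs)
h = layers.Dense(5, activation="relu", name="hidden2")(h)
outputs = layers.Dense(3, activation="softmax", name="output")(h)
source_model = keras.Model(inputs, outputs)

# Feature extractor: same input, but stops at the last hidden layer.
extractor = keras.Model(inputs, source_model.get_layer("hidden2").output)
extractor.trainable = False  # the reused layers are not updated

# Synthetic data for the new problem (illustrative only).
X = np.random.rand(20, 2).astype("float32")
y = np.random.randint(0, 3, size=20)

# Map the raw inputs to the learned feature representation.
features = extractor.predict(X, verbose=0)

# Train a new output layer on the extracted features.
head = keras.Sequential([
    keras.Input(shape=(features.shape[1],)),
    layers.Dense(3, activation="softmax"),
])
head.compile(optimizer="adam", loss="sparse_categorical_crossentropy")
head.fit(features, y, epochs=1, verbose=0)
```

Because the features are computed once with `predict`, the reused layers never see gradient updates. This contrasts with the weight initialization use, where the transferred weights continue to be trained on the new problem.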

