Unsupervised Cross-lingual Representation Learning

This post expands on the ACL 2019 tutorial on Unsupervised Cross-lingual Representation Learning.

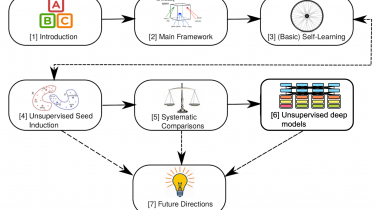

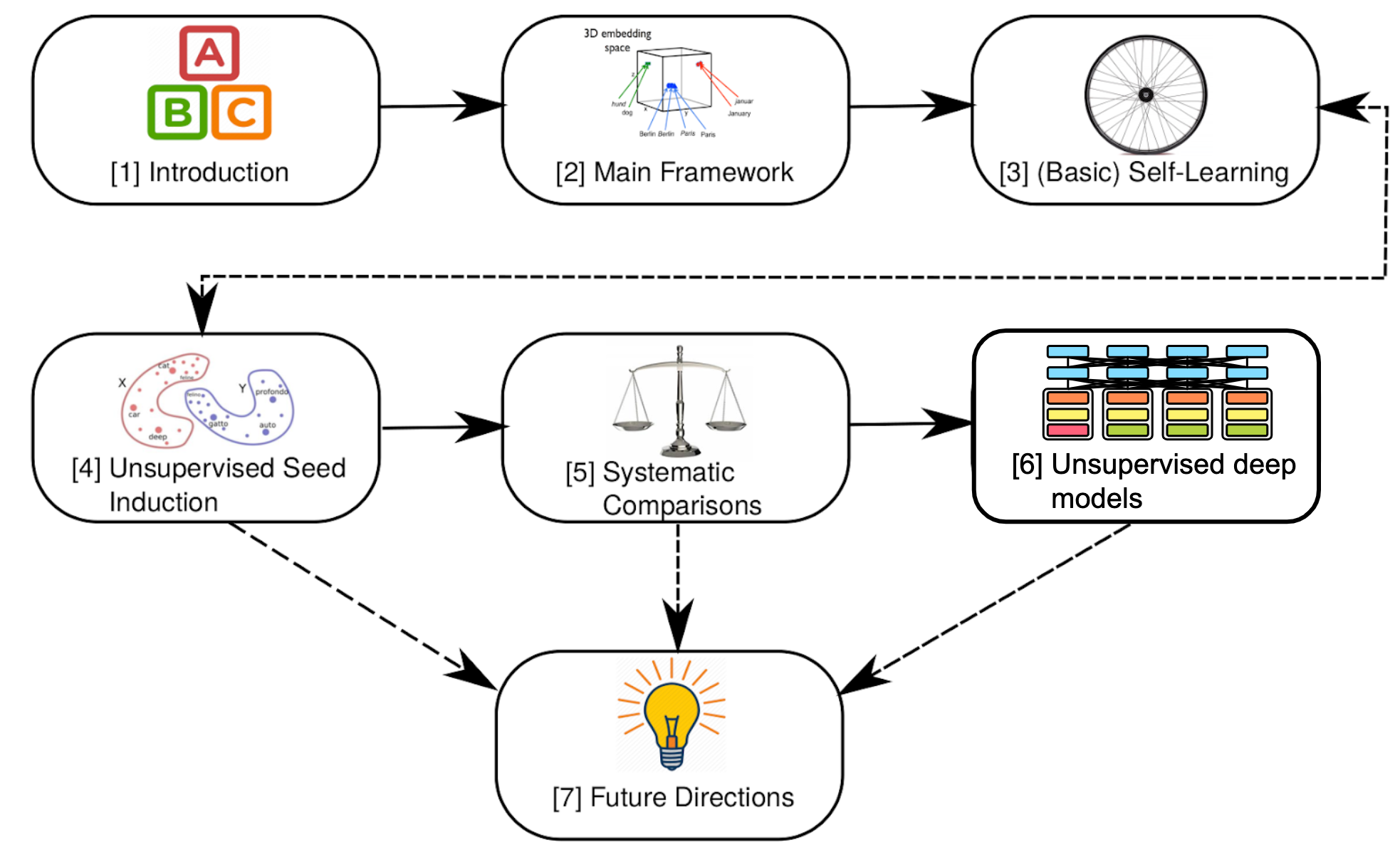

The tutorial was organised by Ivan Vulić, Anders Søgaard, and me. In this post, I highlight key insights and takeaways and provide additional context and updates based on recent work. In particular, I cover unsupervised deep multilingual models such as multilingual BERT. You can see the structure of this post below:

The slides of the tutorial are available online.

Cross-lingual representation learning can be seen as an instance of transfer learning, similar to domain adaptation, except that the domains here are different languages.