Hypothesis Test for Comparing Machine Learning Algorithms

Last Updated on September 1, 2020

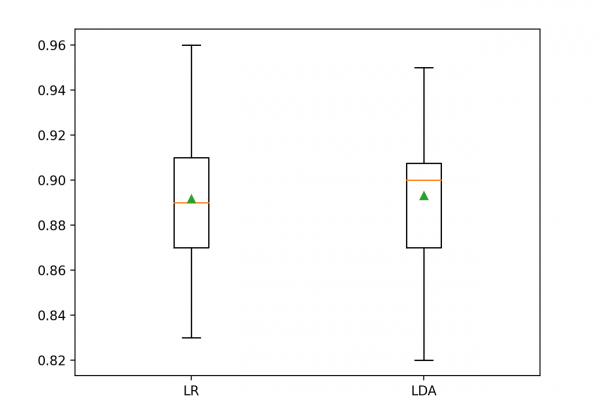

Machine learning models are chosen based on their mean performance, often calculated using k-fold cross-validation.

The algorithm with the best mean performance is expected to be better than those algorithms with worse mean performance. But what if the difference in the mean performance is caused by a statistical fluke?

The solution is to use a statistical hypothesis test to evaluate whether the difference in mean performance between any two algorithms is likely real or could plausibly be due to chance.

In this tutorial, you will discover how to use statistical hypothesis tests for comparing machine learning algorithms.

After completing this tutorial, you will know:

- Performing model selection based on the mean model performance can be misleading.

- Five repeats of two-fold cross-validation (5x2cv) with a modified Student’s t-test is good practice for comparing machine learning algorithms.

- How to use the MLxtend machine learning library to compare algorithms using a statistical hypothesis test.
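To make the second point concrete, here is a minimal sketch of the t statistic behind the 5x2cv paired test, written in plain Python so the arithmetic is visible. The function name `five_by_two_cv_t` and the score differences in `example_diffs` are made up for illustration and are not results from any real experiment. In practice the differences would come from fitting both algorithms on each half of five random 50/50 splits, and MLxtend's `paired_ttest_5x2cv` function is intended to handle that procedure for you.

```python
import math


def five_by_two_cv_t(diffs):
    """Return the t statistic of the 5x2cv paired t-test.

    diffs: five (d1, d2) pairs, one per repeat, where d1 and d2 are the
    score of algorithm A minus the score of algorithm B on the two folds.
    """
    if len(diffs) != 5 or any(len(pair) != 2 for pair in diffs):
        raise ValueError("expected 5 repeats with 2 fold differences each")

    variances = []
    for d1, d2 in diffs:
        mean = (d1 + d2) / 2.0
        # Variance estimate of the two differences within one repeat.
        variances.append((d1 - mean) ** 2 + (d2 - mean) ** 2)

    denom = math.sqrt(sum(variances) / 5.0)
    if denom == 0.0:
        raise ValueError("all within-repeat variances are zero")

    # The numerator is the difference from the first fold of the first repeat.
    return diffs[0][0] / denom


# Critical value of Student's t, 5 degrees of freedom, two-sided alpha = 0.05.
T_CRITICAL = 2.571

# Hypothetical accuracy differences (A minus B), for illustration only.
example_diffs = [(0.02, 0.01), (0.03, 0.00), (0.01, 0.02), (0.02, 0.02), (0.00, 0.03)]

t_stat = five_by_two_cv_t(example_diffs)
significant = abs(t_stat) > T_CRITICAL
print(f"t = {t_stat:.3f}, significant at 0.05: {significant}")
```

With these illustrative numbers, algorithm A has the higher mean score, yet the statistic stays below the critical value, so the test would not reject the hypothesis that the two algorithms perform the same.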

Kick-start your project with my new book Statistics for Machine Learning, including step-by-step tutorials and the Python source code files for all examples.

Let’s get started.
