10 ML & NLP Research Highlights of 2019

This post gathers ten ML and NLP research directions that I found exciting and impactful in 2019.

For each highlight, I summarise the main advances that took place this year, briefly state why I think it is important, and provide a short outlook for the future.

The full list of highlights is here:

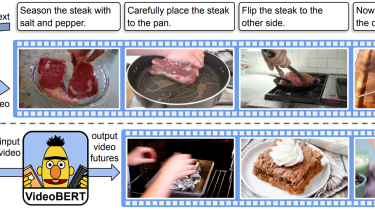

- Universal unsupervised pretraining
- Lottery tickets
- The Neural Tangent Kernel
- Unsupervised multilingual learning
- More robust benchmarks
- ML and NLP for science
- Fixing decoding errors in NLG
- Augmenting pretrained models
- Efficient and long-range Transformers
- More reliable analysis methods

What happened? Unsupervised pretraining was prevalent in NLP this year, mainly driven by BERT (Devlin et al.,